As an experienced AWS specialist transitioning to Azure, I encountered a well-known challenge in a new environment. One notable issue was the high transaction costs in Azure Table Storage. Leveraging my AWS expertise, I developed a custom solution that efficiently cleans Azure Tables while minimizing transaction expenses. In this blog post, I’ll be sharing the insights from my journey.

Archive Data Problem

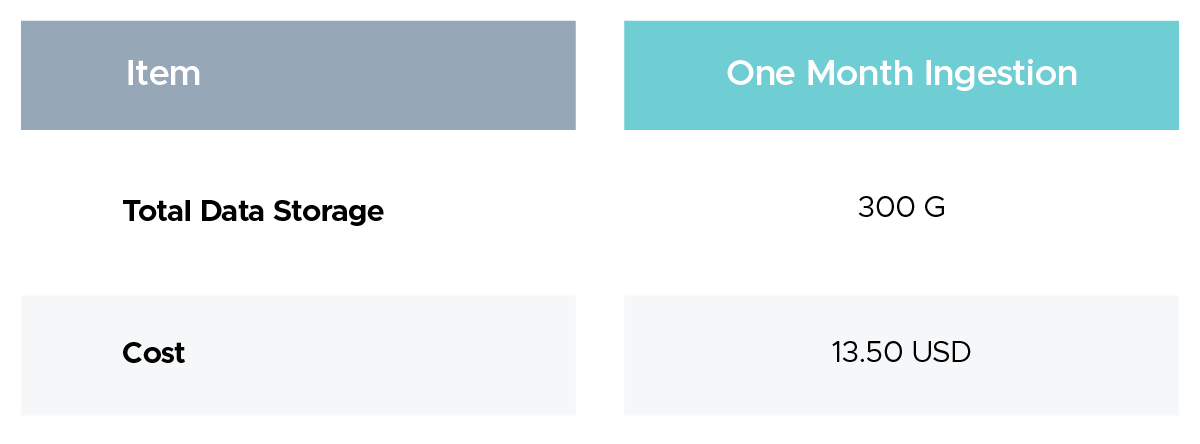

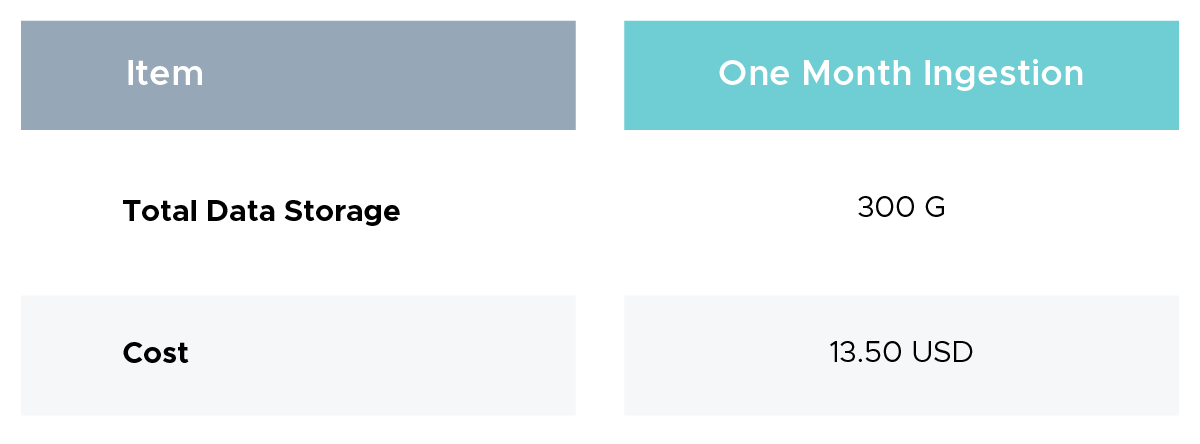

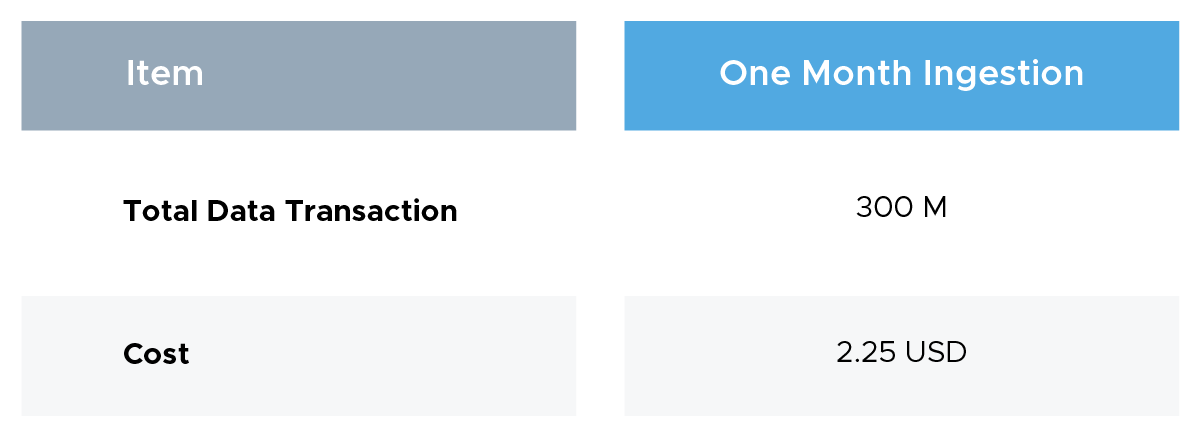

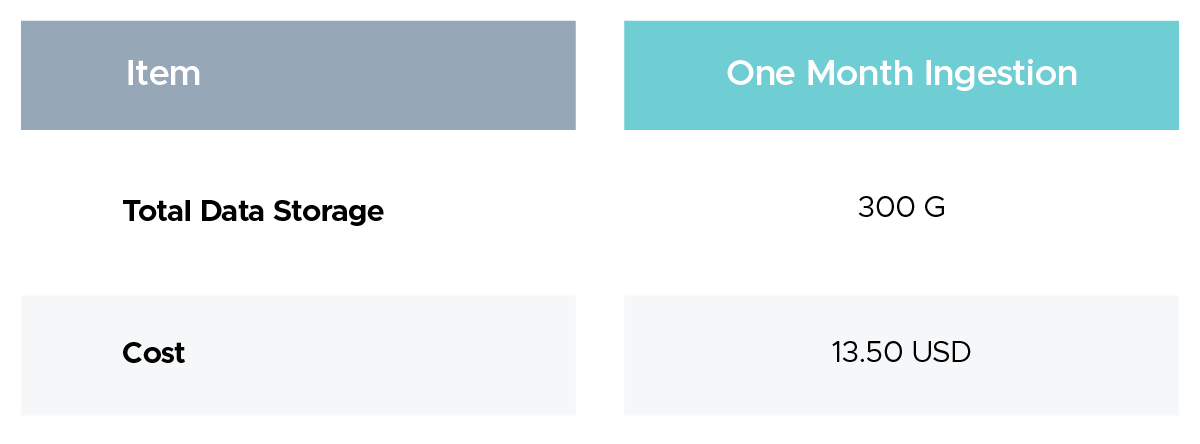

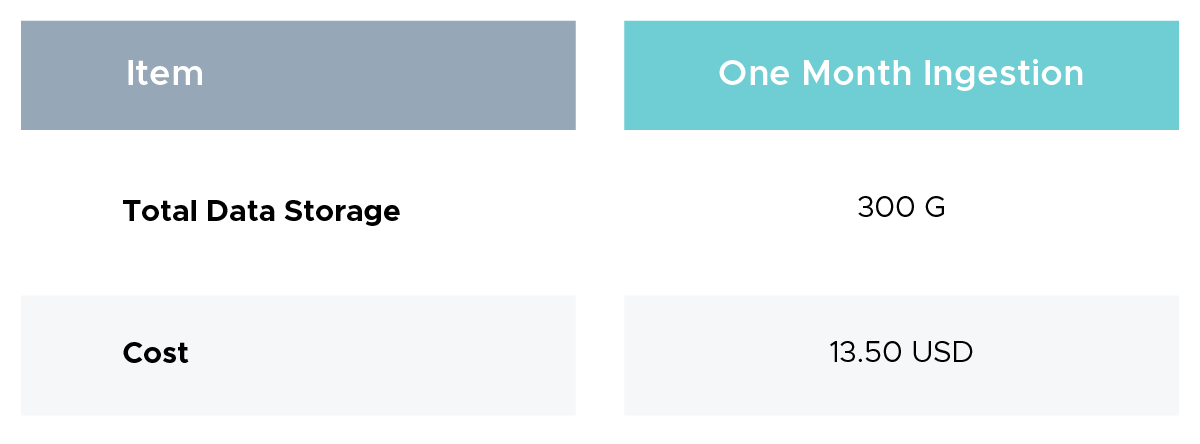

Keeping old data on the cloud can be costly and negatively impact performance. Our long-standing customers are heavily reliant on IoT devices and it deliver data to an Azure Storage Table. With over 10 million entities added daily, this has resulted in massive data collection over time. Significant effects have been observed in terms of costs and performance, especially in terms of transaction costs for data inserts, as a result of the noteworthy fact that this data has been held in the absence of retention restrictions. Optimizing these transaction costs while preserving the system's functioning is now the difficult part. Here are the current costs for the consumer to examine.

Note: The current rate is $0.0045 per GB and $46.0800 per TB

Operation and Data transfer price ($0.0004 on 10K Insert Transaction vary by tier

**Data storage and transaction pricing for account-specific key encrypted Tables that rely on a key that is scoped to the storage account to be able to configure the customer-managed key for encryption at rest.

The end goal is to lower the data transaction cost and implement a retention period of 1 month which means older data more than 1 month should be removed.

Current Total Cost over 1 year

Total Storage Cost: Increasing storage cost by 13.5 USD every month = $1053 USD (13.5 + 27 +...)

Total Transaction Cost: $144 USD

Grand Total: $165+$144=$309 USD

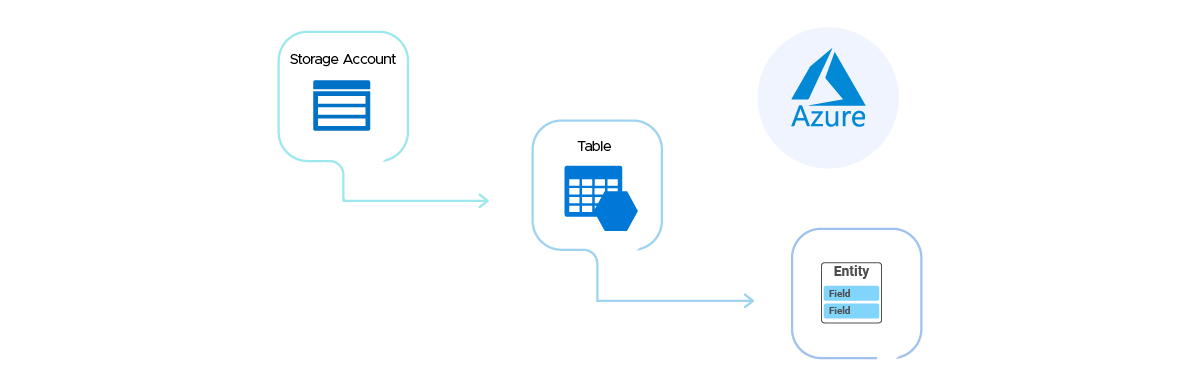

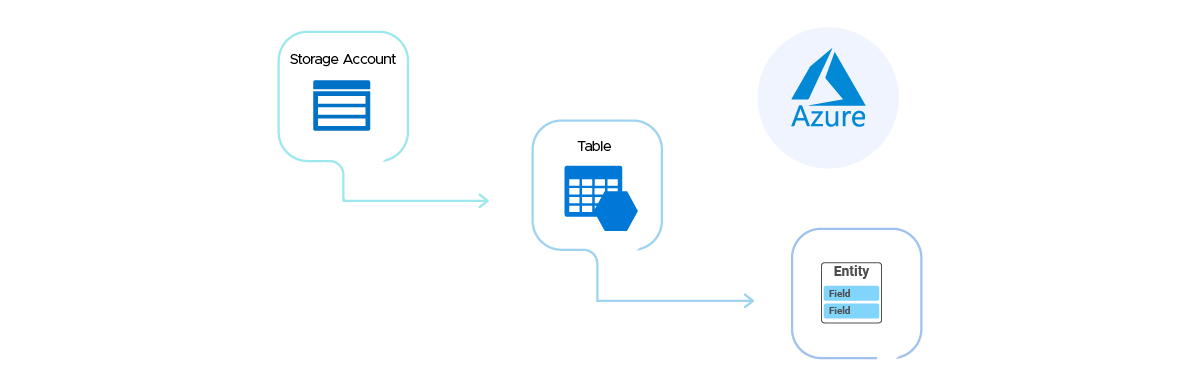

Before diving into the solution, let's briefly review Azure Table Storage.

Understanding Azure Table Storage

- It's a cloud-based NoSQL datastore for structured data

- Offers a key/attribute store with a schemaless design

- Provides fast and cost-effective access for many applications

- Ideal for storing flexible datasets like user data, device information, and metadata

- Can store terabytes of structured data

- Supports authenticated calls from inside and outside the Azure cloud

An entity group transaction must meet the following requirements:

- Every entity involved in the transaction that is subject to operations needs to have the same PartitionKey value.

- In a transaction, an entity can only appear once and can only be the target of one operation.

- The transaction's overall payload size cannot exceed 4 MiB, and it can contain up to 100 entities in total.

- All entities are subject to the limitations described in Understanding the Table Service Data Model.

Crafting a Custom Solution

We had a discussion with the customer and it turns out they are only doing insertion in the table and not using the data after a month. Also, every insertion in the Azure table was of 2KB and doing single insertions which is costing a lot.

So we buffered all rows to be inserted with a batch of 2000 and used Batch write transactions.

To delete older data, I leveraged my AWS experience to create a custom solution for removing outdated records from Azure Tables.

Data Identification: Leveraging timestamps and partition keys to efficiently locate outdated entries.

Here’s a snippet of the data identification logic older than 6 months:

from azure.data.tables import TableServiceClient

from datetime import datetime, timedelta

storage_account_name = "YOUR_ACCOUNT"

storage_account_key = "YOUR_KEY"

# Create a TableServiceClient using the account name and key

connection_string = f"DefaultEndpointsProtocol=https;AccountName={storage_account_name};AccountKey={storage_account_key};TableEndpoint=https://{storage_account_name}.table.core.windows.net/"

table_service_client = TableServiceClient.from_connection_string(conn_str=connection_string)

# Time threshold (1 Month ago)

days_to_keep = 1

threshold_date = datetime.utcnow() - timedelta(days=days_to_keep * 30)

threshold_date_str = threshold_date.isoformat() |

Deletion Strategy: Using the Azure Python SDK to automate the deletion process, inspired by AWS Boto3.

Here's a snippet of the core deletion logic:

# Query entities to delete

query_filter_old = f"Timestamp lt datetime'{threshold_date_str}'"

old_entities = table_client.query_entities(query_filter=query_filter_old)

for entity in old_entities:

table_client.delete_entity(entity['PartitionKey'], entity['RowKey'])

print(f"Deleted entity: PartitionKey={entity['PartitionKey']}, RowKey={entity['RowKey']} from table '{table_name}'.") |

This automated approach allows for periodic cleanups without manual intervention, keeping tables lean and efficient.

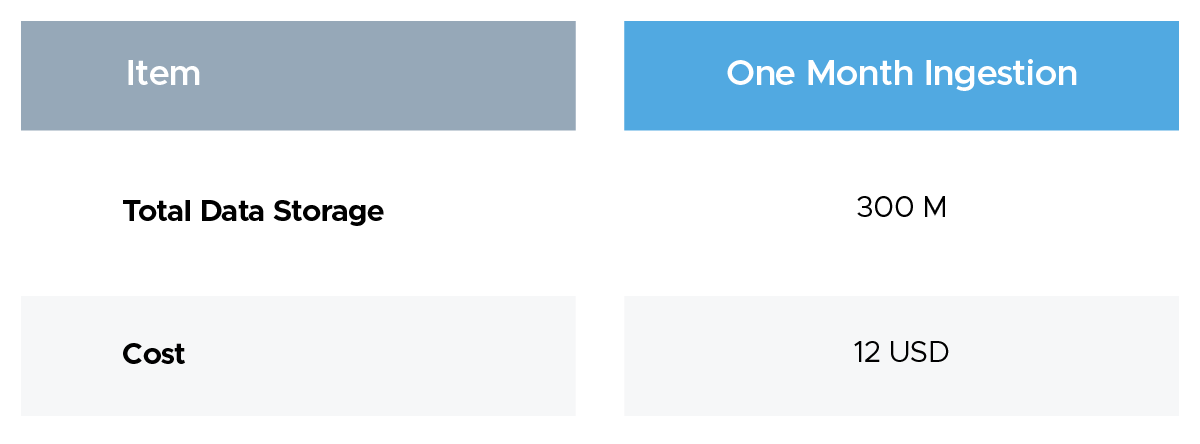

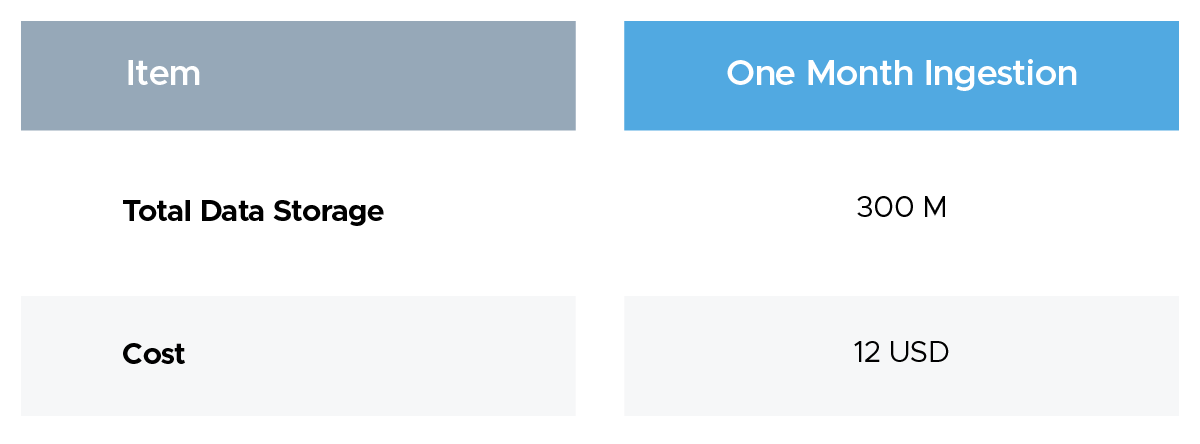

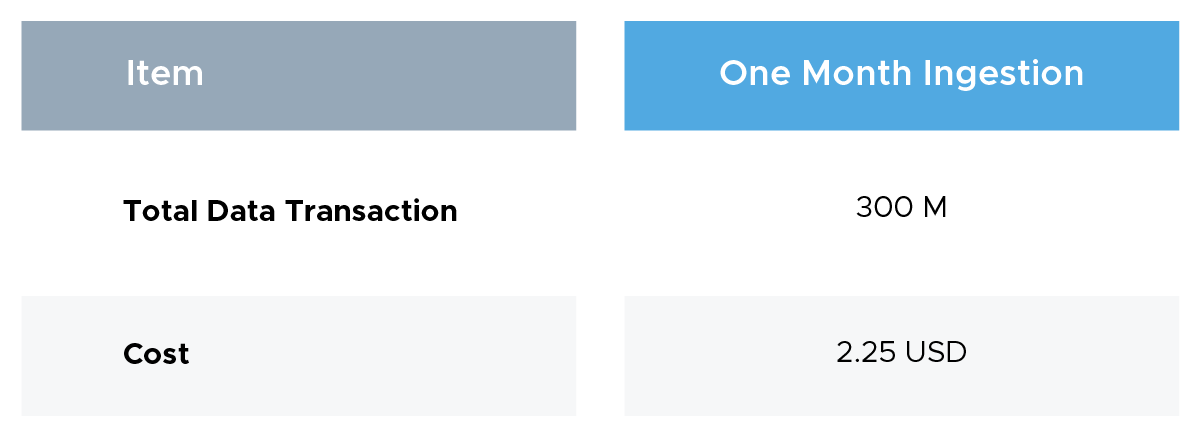

Now, the current cost looks like this:

Note: The current rate is 0.0045 per GB and 46.0800 per TB

Operation and Data transfer price (0.075 on 10K Batch Insert Transaction vary by tier)

New Total Cost Over 1 Year

- Total Storage Cost: $162 USD

- Total Transaction Cost: $27 USD

Grand Total: $162+$27=$189 USD

Cost Savings

Total cost savings after deleting data every year= $1053 -$162 = $891

Total cost savings from moving to single insertion to batched insertion = $144 - $27 = $117

Total cost savings = ($1053+$144) - ($162 + $27) = $1008 (84.21% cost savings)

You would save about 84 % on your bill if you implement a tailored retention solution to remove data on a monthly basis and use batch transactions. With this method, regular data maintenance is possible and storage and transaction costs are greatly reduced.

Benefits of the Custom Solution

- Cost Reduction: Reduction in storage expenses for out-of-date entries was significant

- Performance Improvement: Significant increases in the speed of data retrieval and queries.

- Automation: The reduction of manual intercept in data management activities.

- Scalability: The ability to modify the solution to accommodate increasing volumes of data.

Conclusion

Transitioning from AWS to Azure presented unique challenges as well as opportunities to leverage cross-platform experience. We have not only resolved a specific problem with Azure Table Storage by developing this innovative solution and optimizing the cost, but we have also demonstrated that cloud expertise is transferable to several platforms.

Regardless of the platform you are using, effective data management is essential to maximizing your cloud's performance and expenses.

1]: Please check the most recent price as it may vary: official documentation

Code for Reference:

from azure.data.tables import TableServiceClient

from datetime import datetime, timedelta

storage_account_name = "YOUR_ACCOUNT"

storage_account_key = "YOUR_KEY" # Replace with your actual key

# Create a TableServiceClient using the account name and key

connection_string = f"DefaultEndpointsProtocol=https;AccountName={storage_account_name};AccountKey={storage_account_key};TableEndpoint=https://{storage_account_name}.table.core.windows.net/"

table_service_client = TableServiceClient.from_connection_string(conn_str=connection_string)

# Time threshold (6 Month ago)

days_to_keep = 6

threshold_date = datetime.utcnow() - timedelta(days=days_to_keep * 30)

#threshold_date = datetime.utcnow() - timedelta(hours=days_to_keep)

threshold_date_str = threshold_date.isoformat()

# Get all tables in the storage account

all_tables = table_service_client.list_tables()

# Ask user for a table name or to apply to all tables

table_name_input = input("Enter a table name to delete old entities or press Enter to apply to all tables: ").strip()

# Prepare to collect entities to delete

to_delete_entities = []

# Function to process tables

def process_table(table_name):

table_client = table_service_client.get_table_client(table_name)

# Query entities with Timestamp older than the threshold

query_filter_old = f"Timestamp lt datetime'{threshold_date_str}'"

old_entities = table_client.query_entities(query_filter=query_filter_old)

# Collect old entities for review

entity_count = sum(1 for _ in old_entities) # Count old entities

return entity_count

# Process specified table or all tables

if table_name_input:

entity_count = process_table(table_name_input)

table_names = [table_name_input]

else:

table_names = [table.name for table in all_tables]

entity_count = sum(process_table(table_name) for table_name in table_names)

# Display the results

print(f"\nRetention Period: {days_to_keep} Months")

print(f"Number of entities older than {days_to_keep} Months: {entity_count}")

# Ask for user confirmation before proceeding with deletion

confirm = input("\nDo you want to delete these entities? (yes/no): ").strip().lower()

if confirm == 'yes':

# Delete old entities for specified or all tables

for table_name in table_names:

table_client = table_service_client.get_table_client(table_name)

# Query entities again to delete them

query_filter_old = f"Timestamp lt datetime'{threshold_date_str}'"

old_entities = table_client.query_entities(query_filter=query_filter_old)

for entity in old_entities:

table_client.delete_entity(entity['PartitionKey'], entity['RowKey'])

print(f"Deleted entity: PartitionKey={entity['PartitionKey']}, RowKey={entity['RowKey']} from table '{table_name}'.")

print("Deletion completed.")

else:

print("Deletion aborted.") |